Symptoms:

Copy and paste through the vSphere Client remote console does not work in either direction, neither into nor out of the virtual machine.

The copy and paste options are disabled.

Cause:

For security reasons, copy and paste operations are disabled by default starting with vSphere Client 4.1.

Solution:

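To resolve the problem, these options must be re-enabled, either for an individual virtual machine via the vSphere Client or for all virtual machines on an ESXi/ESX host via the host's configuration file.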

To enable copy and paste for a specific virtual machine, proceed as follows:

Note: VMware Tools must be installed in the guest operating system of the virtual machine for copy and paste to work!

- Log in to a vCenter Server using the vSphere Client and shut down the virtual machine in question.

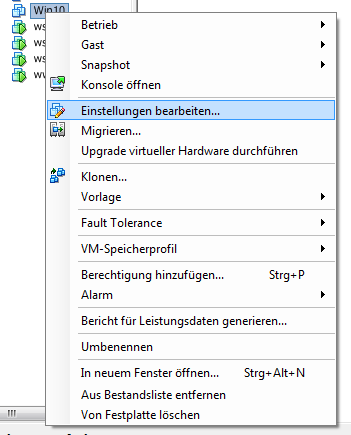

- Right-click the virtual machine and select "Edit Settings".

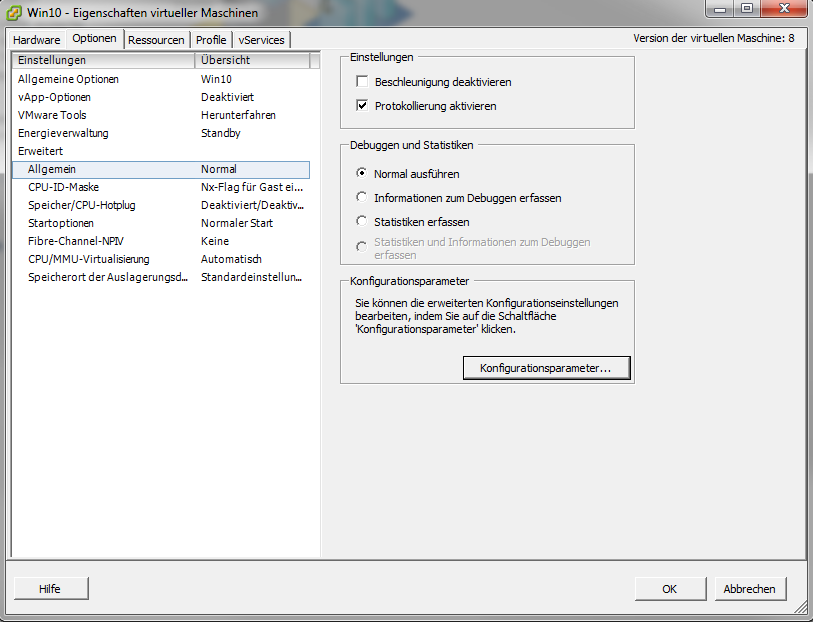

- Go to the "Options" tab and then to "Advanced > General".

- Then click the "Configuration Parameters" button.

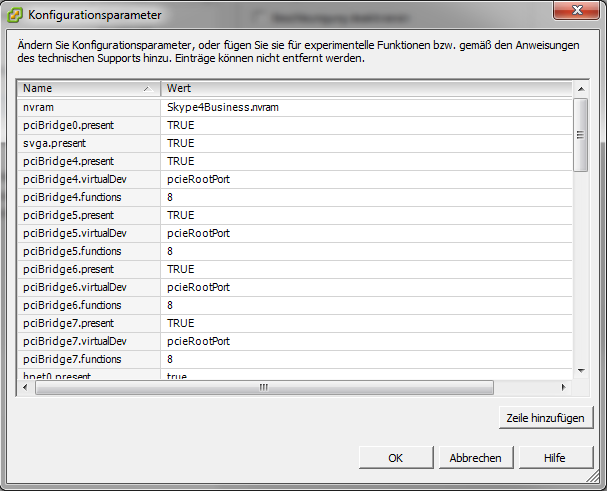

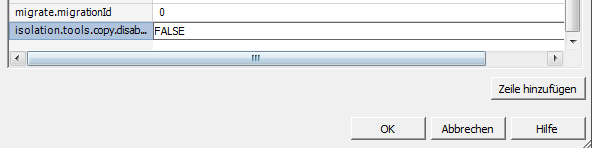

- Click "Add Row" and enter the following values in the "Name" and "Value" columns:

isolation.tools.copy.disable FALSE

isolation.tools.paste.disable FALSE

- Click "OK" to save the settings, then click "OK" again to close the properties dialog.

- Now power on the virtual machine; the clipboard should then work.
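The values entered in the "Configuration Parameters" dialog are stored as `key = "value"` lines in the virtual machine's .vmx configuration file. As a minimal sketch, assuming you edit a copy of that file's text yourself while the virtual machine is powered off (instead of using the dialog), the following Python function sets both entries: it replaces an existing line for the same key and appends missing keys. The helper `set_vmx_options` is hypothetical and not part of any VMware tool; exact, case-sensitive key matching is also an assumption.

```python
import re

# Values from the steps above: FALSE re-enables copy and paste.
CLIPBOARD_OPTIONS = {
    "isolation.tools.copy.disable": "FALSE",
    "isolation.tools.paste.disable": "FALSE",
}


def set_vmx_options(vmx_text, options):
    """Return vmx_text with every key in options set to its value.

    The first line defining a key is replaced, later duplicates are
    dropped, and keys not present yet are appended as key = "value".
    Keys are matched exactly (case-sensitive), which is an assumption.
    """
    remaining = dict(options)
    result = []
    for line in vmx_text.splitlines():
        # A .vmx entry looks like: key = "value"
        match = re.match(r'\s*([^=\s]+)\s*=', line)
        key = match.group(1) if match else None
        if key in options:
            if key in remaining:
                result.append(f'{key} = "{remaining.pop(key)}"')
            continue  # drop duplicate definitions of the same key
        result.append(line)
    # Append the keys that were not defined anywhere in the file
    for key, value in remaining.items():
        result.append(f'{key} = "{value}"')
    return "\n".join(result) + "\n"


if __name__ == "__main__":
    sample = 'displayName = "test-vm"\nisolation.tools.copy.disable = "TRUE"\n'
    print(set_vmx_options(sample, CLIPBOARD_OPTIONS), end="")
    # displayName = "test-vm"
    # isolation.tools.copy.disable = "FALSE"
    # isolation.tools.paste.disable = "FALSE"
```

Running the function a second time on its own output changes nothing, so it can be applied repeatedly without creating duplicate entries.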

To enable copy and paste for all virtual machines on an ESXi/ESX host, proceed as follows:

- Log in to the ESXi/ESX host as root.

- Create a backup of the /etc/vmware/config file.

- Open /etc/vmware/config in a text editor such as vi.

- Add the following entries:

vmx.fullpath = "/bin/vmx"

isolation.tools.copy.disable="FALSE"

isolation.tools.paste.disable="FALSE"

- Save and close the file.

- Copy and paste is enabled only after the virtual machines have been power cycled (powered off and on again). This must be done through the vSphere Client, not by restarting from within the guest operating system.

Caution: These settings are not preserved when the host is upgraded to a newer version! The changes must then be made again.
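Because the host-wide entries have to be added again after an upgrade, the manual edit of /etc/vmware/config can be scripted. The following is a minimal sketch, assuming a Python 3.6+ interpreter is available on the host (not every ESX/ESXi version ships one; otherwise run it against a copy of the file and copy the result back). `patch_host_config` and `add_missing_entries` are hypothetical helpers: they back up the file to `config.bak`, append only the entries that are missing, and report keys that already exist with a different value instead of overwriting them.

```python
import re
import shutil
from pathlib import Path

# Entries from the steps above. Spaces around "=" are assumed to be
# accepted, since the entries listed above use both styles.
HOST_ENTRIES = [
    ("vmx.fullpath", "/bin/vmx"),
    ("isolation.tools.copy.disable", "FALSE"),
    ("isolation.tools.paste.disable", "FALSE"),
]

# Matches key = "value" or key="value"; lines starting with '#' do not match.
LINE_RE = re.compile(r'^\s*([^=\s#]+)\s*=\s*"?([^"]*?)"?\s*$')


def add_missing_entries(text, entries):
    """Return (new_text, conflicts) without touching anything on disk.

    Missing keys are appended; keys already set to the desired value are
    skipped; keys set to a different value are left untouched and listed
    in conflicts for manual review.
    """
    existing = {}
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match:
            existing[match.group(1)] = match.group(2)

    additions, conflicts = [], []
    for key, value in entries:
        if key not in existing:
            additions.append(f'{key} = "{value}"')
        elif existing[key] != value:  # exact, case-sensitive comparison
            conflicts.append(key)

    if additions:
        if text and not text.endswith("\n"):
            text += "\n"
        text += "\n".join(additions) + "\n"
    return text, conflicts


def patch_host_config(path="/etc/vmware/config"):
    """Back up the file to <name>.bak, then append the missing entries."""
    config = Path(path)
    # Overwrites an older config.bak if the script is run again.
    shutil.copy2(config, config.with_name(config.name + ".bak"))
    old_text = config.read_text()
    new_text, conflicts = add_missing_entries(old_text, HOST_ENTRIES)
    if new_text != old_text:
        config.write_text(new_text)
    return conflicts
```

A non-empty list returned by `patch_host_config()` points to lines that need manual review, for example entries typed with typographic quotes such as „/bin/vmx“, which do not match the expected value. The virtual machines still have to be power cycled afterwards.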

Content retrieved from: https://industry-electronics.de/knowhow/vsphere-cut-and-paste.